Mechanisms of Verifications and Backtracking #6


-

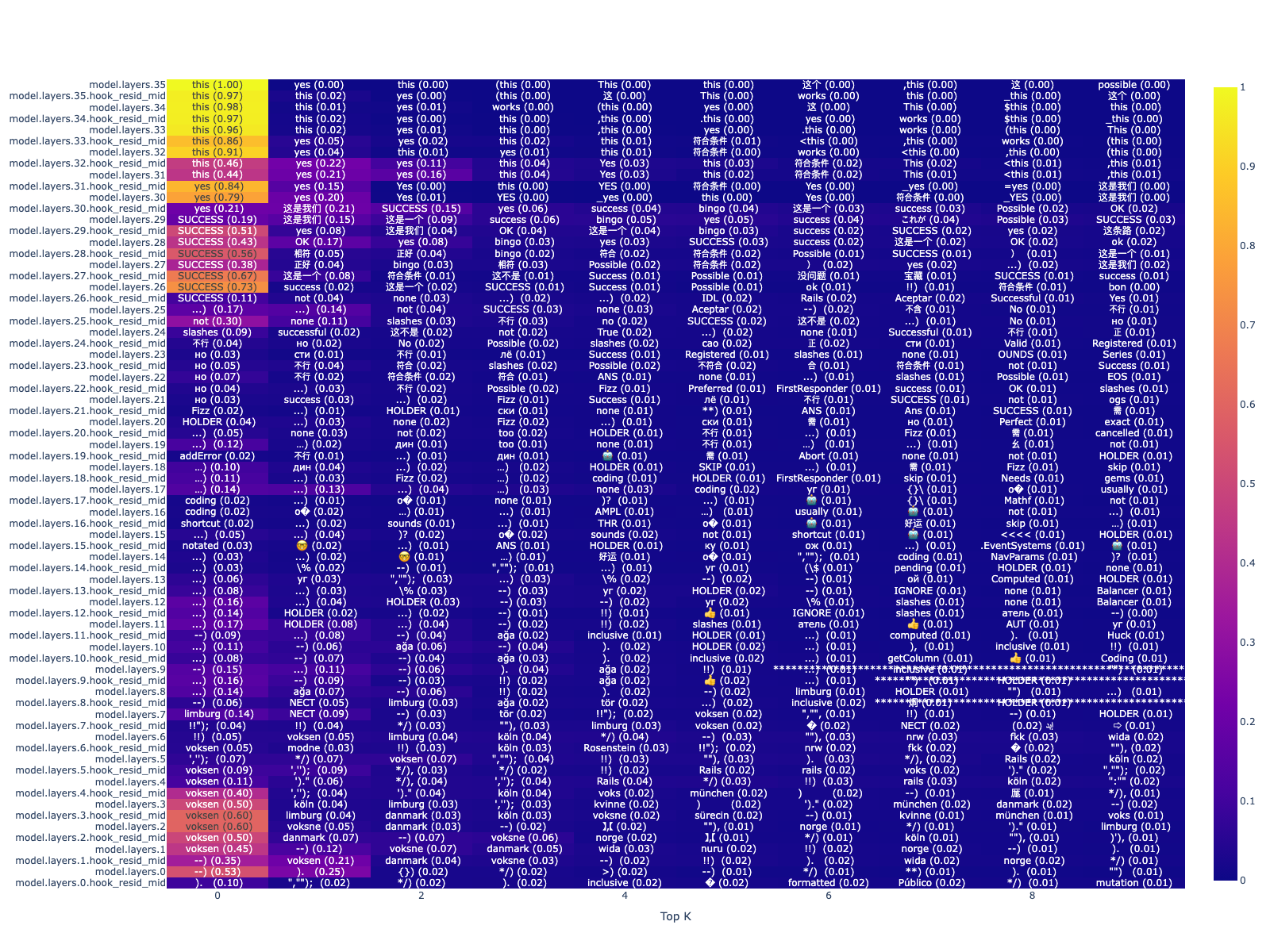

Model

First of all, a huge shoutout to Jiayi-Pan's reproduction of R1 on a simple "countdown" task. While training a model using Jiayi's code, R1-Zero's CoT starts off looking something like this:

Luckily, with long enough training, the model's CoT converges to a very structured output:

Yay! This allows us to analyze the model's CoT in a more structured manner.

Linear Probing

Let's go to our bread and butter: linear probing. As it turns out, we can fit a linear probe with near-perfect accuracy in almost all layers (every layer starting from the 4th).

What do these vectors encode?

Let's notate our linear probe at a specific layer as

What happens if we project each of these vectors onto the model's token embedding space?
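As a rough illustration of the two steps above (fitting a linear probe on hidden states, then projecting the probe direction onto token embeddings), here is a minimal NumPy sketch. Everything in it is an assumption made for illustration: the hidden states are synthetic, the six-token vocabulary and the orthonormal unembedding matrix `W_U` are made up, and `fit_probe` and `top_tokens` are hypothetical helper names rather than code from this thread.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 16
vocab = ["success", "failed", "the", "of", "and", "7"]  # toy vocabulary (assumption)

# Toy unembedding matrix with orthonormal rows, one row per token (assumption).
W_U = np.linalg.qr(rng.normal(size=(d_model, d_model)))[0][: len(vocab)]

# Synthetic "hidden states": label 1 (correct) leans toward "success",
# label 0 (incorrect) leans toward "failed", plus Gaussian noise.
n = 400
labels = rng.integers(0, 2, size=n)
means = 4.0 * np.where(labels[:, None] == 1, W_U[0], W_U[1])
hidden = means + rng.normal(size=(n, d_model))


def fit_probe(x, y, lr=0.1, steps=500):
    """Logistic-regression probe trained with plain gradient descent."""
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
        grad = p - y
        w -= lr * (x.T @ grad) / len(y)
        b -= lr * grad.mean()
    return w, b


def top_tokens(direction, k=3):
    """Tokens whose unembedding rows have the largest dot product with `direction`."""
    scores = W_U @ direction
    return [vocab[i] for i in np.argsort(-scores)[:k]]


w, b = fit_probe(hidden, labels)
accuracy = np.mean((hidden @ w + b > 0).astype(int) == labels)
print(f"probe accuracy: {accuracy:.3f}")
print("tokens promoted by +w:", top_tokens(w))
print("tokens promoted by -w:", top_tokens(-w))
```

With a real model, `hidden` would be residual-stream activations at one layer and `W_U` the model's unembedding matrix; which layer and which token positions to use are choices the sketch does not settle.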

Ok great! So these vectors seem to be encoding some information relevant to verifying a response as correct or incorrect.

Steering with Verification Vectors

Ok, so let's see if we can steer the model's CoT with these vectors. Below I am showing results before and after steering. I've omitted the beginning of the CoT for brevity, where it says "We have numbers x, y, z,... we need to use all of them to..."

Interestingly, in the last example, we got the model to say "! Indeed,", but in generating additional CoT tokens, it corrected itself.
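The steering step can be sketched as adding a scaled copy of the probe direction to the hidden states. This is a minimal sketch under assumptions: the thread does not say which layer, which positions, or what scale were used, so `alpha`, the shapes, and the helper name `steer` are all illustrative.

```python
import numpy as np


def steer(hidden, direction, alpha):
    """Add `alpha` times the unit-normalized `direction` to every position."""
    unit = direction / np.linalg.norm(direction)
    return hidden + alpha * unit


rng = np.random.default_rng(0)
hidden = rng.normal(size=(5, 8))   # toy (sequence length, hidden size) activations
probe_w = rng.normal(size=8)       # stand-in for a probe direction at one layer

before = hidden @ probe_w
after = steer(hidden, probe_w, alpha=4.0) @ probe_w

# Each position's probe score shifts by alpha * ||probe_w||.
shift = after - before
print(shift)
```

A negative `alpha` pushes the probe score the other way, which is the natural knob for steering toward "incorrect" instead of "correct".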

Additional Thoughts

Is So then we're simply increasing the likelihood of synonyms of "correct" and "incorrect"? Also, couldn't we have just prompted with something like "...(some CoT)... exactly!" to steer the model? However, the point of this exercise was to find a starting point for how the model is verifying its own chain-of-thought tokens.


-

GSM8K-based steering vectors for Deepseek-R1-Distill-Llama-8B

Google Colab for reproducing the main results

TL;DR: Correct R1-Llama-8B responses on GSM8K usually repeat the correct solution many times within the thought process. Taking the difference between the activations at the end of the sentence containing the last occurrence of the answer and those at the first occurrence (over a few GSM8K examples) gives a steering vector that can end or prolong R1-Llama-8B responses on a GSM8K holdout set. Preliminary results on other tasks suggest that the steering vectors work there as well (but worse).

Main result: Steering effectiveness (for the first two columns, steering is successful when <0.5; for the last column, when >0.5)

Detailed write-up: #8
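The contrastive construction described here can be sketched as a difference of mean activations. This is only a sketch: averaging over examples is my assumption about how "over a few GSM8K examples" is aggregated, the activations are synthetic, and `steering_vector` is a hypothetical helper name.

```python
import numpy as np


def steering_vector(acts_last, acts_first):
    """Mean activation at the last answer occurrence minus mean at the first."""
    return acts_last.mean(axis=0) - acts_first.mean(axis=0)


rng = np.random.default_rng(0)
n_examples, d_model = 32, 8
offset = np.arange(d_model, dtype=float)            # hypothetical shift between the two positions
acts_first = rng.normal(size=(n_examples, d_model))  # activations at the first occurrence
acts_last = acts_first + offset                      # toy data: last = first + fixed shift

v = steering_vector(acts_last, acts_first)
print(np.round(v, 3))
```

In the toy data the recovered vector equals the planted shift. With real activations the vector would then be added to or subtracted from the residual stream; which sign ends and which prolongs a response is something to check empirically.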

-

Are there any results about the task-specificity of these steering vectors? (Just joined, so I hope I am using the git here correctly. lmk please if not :-) )

-

@ajyl what do you think about starting weekly meetings / more explicitly inviting collaborators on this?

-

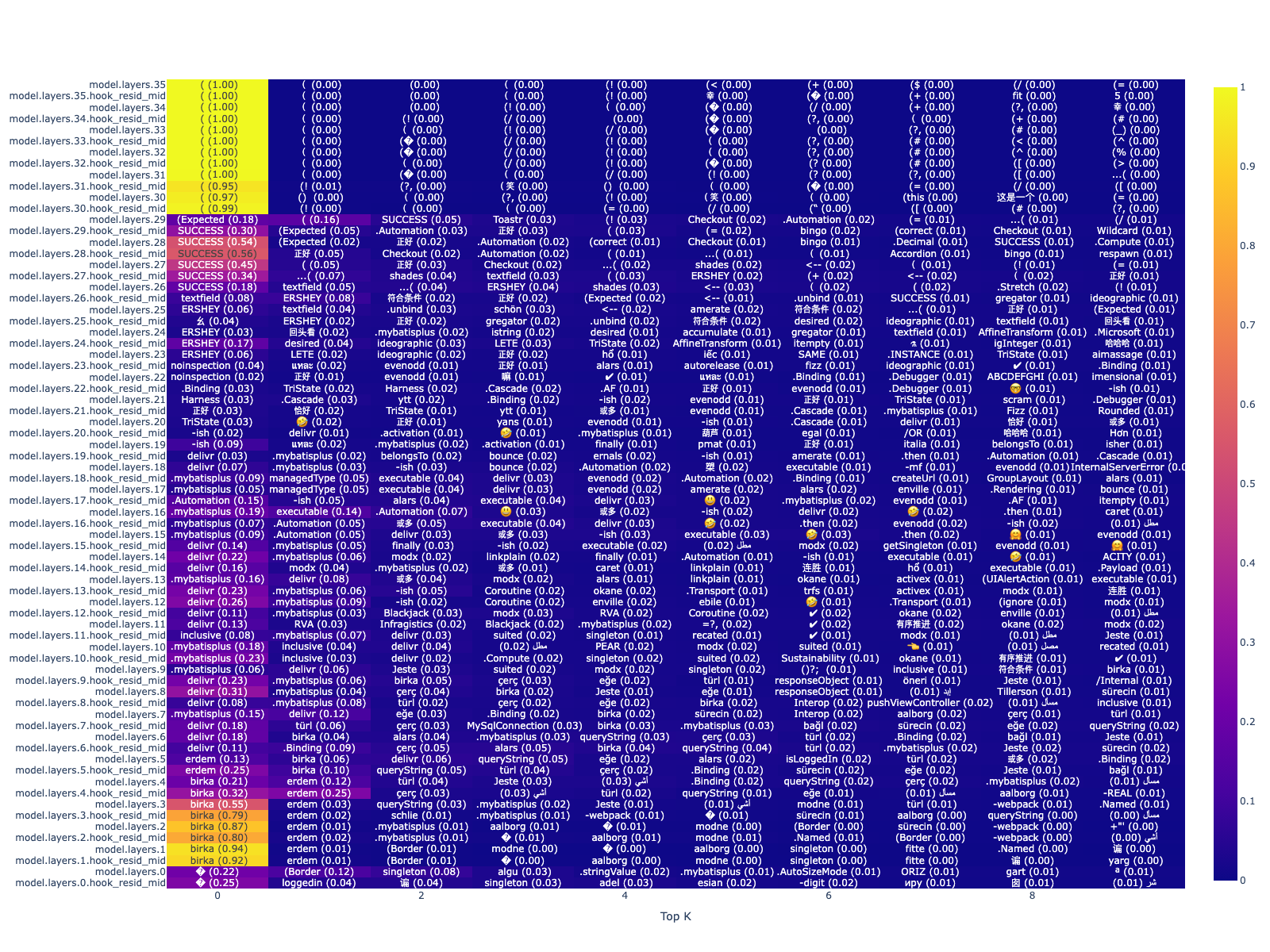

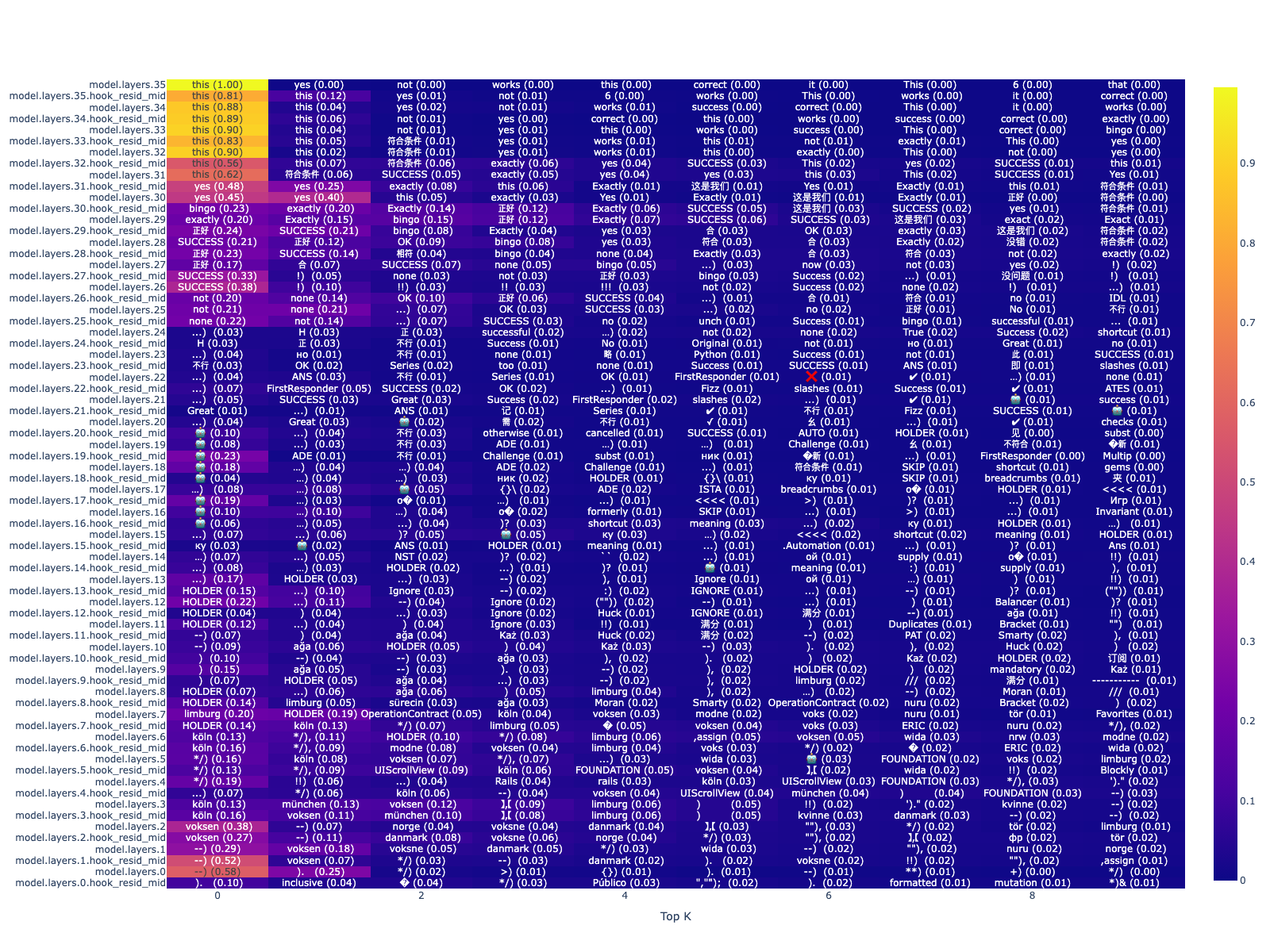

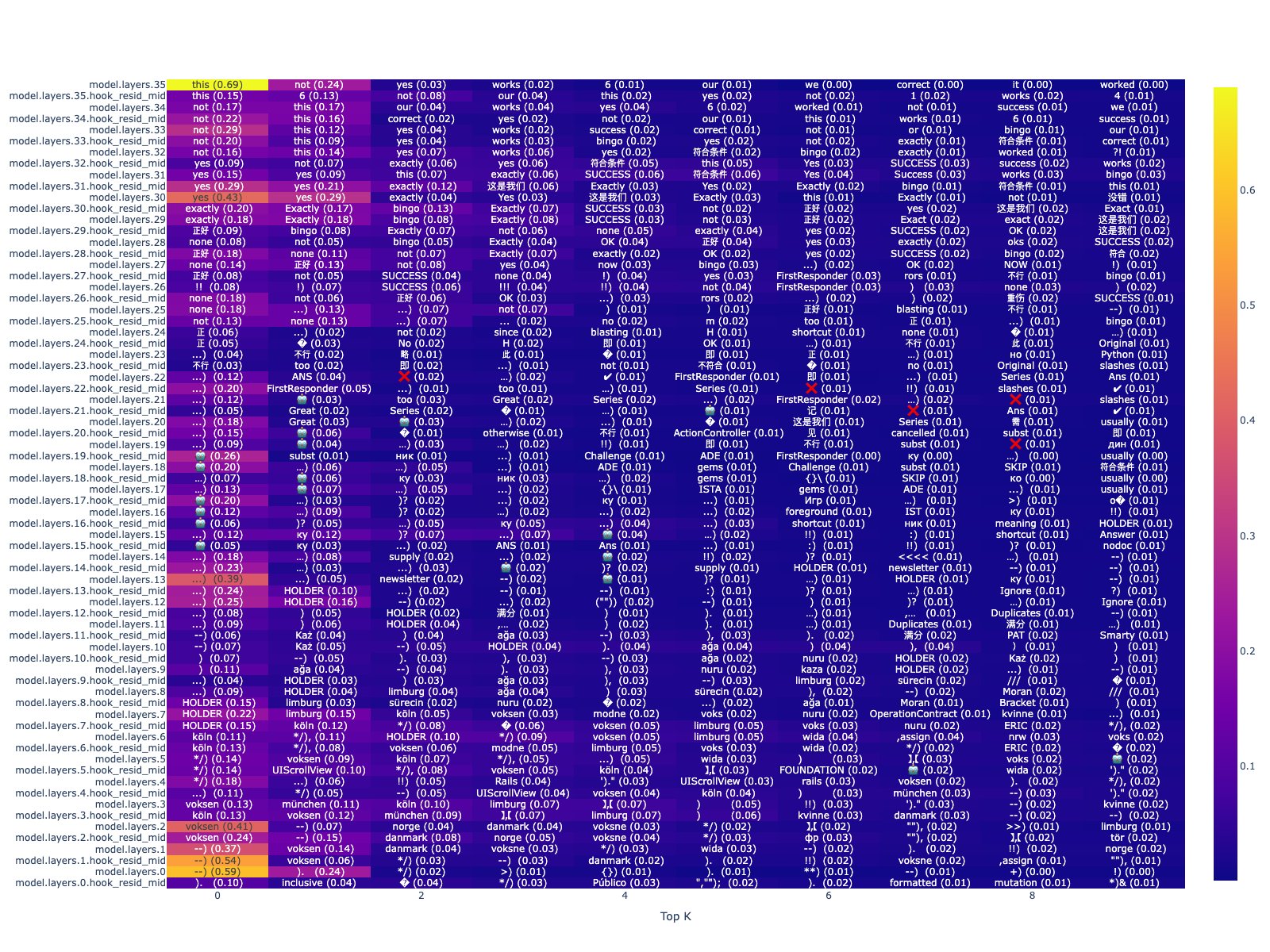

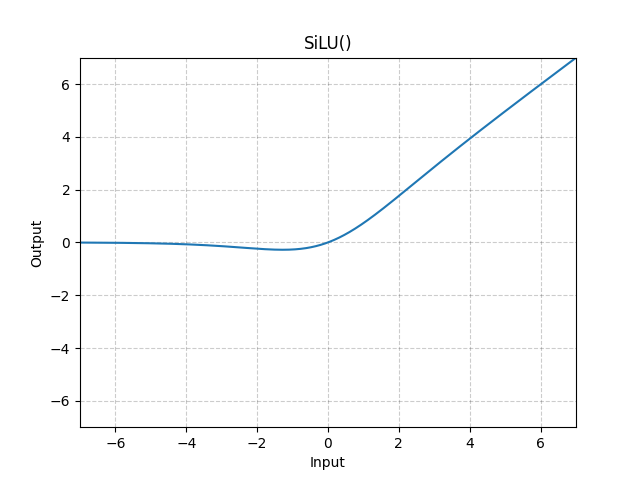

I have some new experiments!! I'll cross-post here too:

Context

This is Part II of investigating how R1 does verifications.

How does R1 know it's found a solution?

R1 will often claim that it's found a solution. Currently I am working with a hypothesis that R1 has some internal representation for "I've found and verified a solution" (let's call this TODO), and that this representation generates "solution tokens" (such as "Therefore the answer is..."). Here I present some of my thoughts on the latter part -- how "solution tokens" are generated.

(Recap from Part I) Model

As a reminder, we are working with a model trained on a specific reasoning task (a countdown task). Luckily, this means we know the exact token to look for when the model has found a solution. Thus, we have a specific timestep (right when a "(" is predicted, and when "this" will be predicted next) where we can do a deep dive.

Good old Logitlens

So let's start by seeing what's encoded at each layer, right before "this works" is generated. (I recommend that you view it via this interactive html link.)

So we see some interesting tokens show up in mid layers, like "success", "OK", "bingo", "yes". Interestingly, even in the previous timestep (when it's predicting the "(" token), you can see words like "success" still show up:

In this post I will discuss the role that MLP blocks play in generating the tokens seen in mid layers, as well as potentially the final predictions.

Looking at MLP "Value Vectors"

I like to think of MLP blocks by rows and columns: I will refer to

Put differently, we can think of the output of an MLP as a weighted sum of the value vectors, where the weights are given by the dot product of the input and key vectors.

So it turns out that you can also project your value vectors onto the token embedding space, and sometimes you'll see coherent semantic clusters show up.

But which value vectors should we look at? In the case of Qwen2.5-3B, we have 36 layers, each with 11008 value vectors.
Instead, we can rely on our probe (see Part I) to guide us. Here are some examples: For

For

Great, so we can localize where the tokens from logitlens are coming from. To confirm that these value vectors are responsible for the tokens showing up in mid-layers, we can do an intervention.

Interventions on Value Vectors

During the forward pass, we can zero out the value vectors that we think might be important. Specifically, we can look at value vectors in the second half of the model (layers 18~36).

Below is what happens when $k = 50$. We are therefore zeroing out 50 * 18 = 900 of the 11008 * 36 = 396288 value vectors, which is about 0.2% of the total:

From this, we can see that while the model still predicts "this", the likelihood of "success" and similar tokens showing up in the mid layers is reduced (it may be a bit hard to compare, but for instance, at layer 26, P("SUCCESS") drops from 0.73 to 0.38).

Though we can do this for larger values of

Interestingly, we can further zero out value vectors that are also similar to

With this, we see a more pronounced effect.

Wait, why does zeroing out value vectors similar to

| Layer, Index | Nearest Neighbors (k = 10) | English Translation |
|---|---|---|
| MLP[27, 4971] | ' inefficient', '没能', 'inity', '不方便', 'Danger', ' disadvantage', '不利于', '还不如', ' challenged', 'ขาด' | ' inefficient ', 'failed ', 'inity ', 'inconvenient ', 'Danger ', ' disadvantage ', 'unfavorable ', 'not as good as ', ' challenged ', 'ขาด ' |
| MLP[27, 4971] * -1 | ' successfully', ' successful', '顺利', '成功', '删除成功', '良好', ' succesfully', '.success', 'successfully', 'successful' | ' successfully', ' successful', 'smooth', 'successful', 'successful deletion', 'good', ' succesfully', '.success', 'successfully', 'successful' |
| MLP[26, 744] | '未能', '不够', ' nicht', '不像', '达不到', '不清楚', '不具备', '不到位', '不符', '还不' | 'failed', 'not enough', ' nicht', 'not like', 'cannot reach', 'not clear', 'not available', 'not in place', 'not in line', 'not yet' |
| MLP[26, 744] * -1 | '慎', '足', '同等', ' tend', 'ONDON', '足以', '均衡', '谨慎', ' equal', 'fast' | 'careful', 'sufficient', 'equal', 'tend', 'ONDON', 'enough', 'balanced', 'cautious', 'equal', 'fast' |
| MLP[23, 7356] | '不利于', '造成了', '会造成', '严重影响', '禁止', ' threaten', ' threatens', '不可避免', '严重', ' caution' | 'unfavorable', 'caused', 'will cause', 'seriously affect', 'prohibit', 'threaten', 'threatens', 'inevitable', 'serious', 'caution' |
| MLP[23, 7356] * -1 | '从容', ' nhờ', 'rar', ' grâce', ' waive', '就好了', '无忧', ' easier', '有信心', 'kek' | 'calm', 'nhờ', 'rar', 'grâce', 'waive', 'just fine', 'worry-free', 'easier', 'confident', 'kek' |
| MLP[26, 6619] | '缺乏', '缺少', '不方便', ' lacks', '难以', '未能', '无法', ' lack', '得不到', '不符合' | 'lack', 'lack', 'inconvenience', 'lacks', 'difficult', 'failed', 'cannot', 'lack', 'cannot get', 'not in line with' |
| MLP[26, 6619] * -1 | '不仅能', '不错的', '不错', '具有良好', '总算', '这样才能', '有足够的', '良好', '不仅可以', ' sane' | 'not only can', 'good', 'good', 'have good', 'finally', 'only in this way', 'have enough', 'good', 'not only can', 'sane' |
| MLP[27, 9766] | '是不可能', ' neither', '看不到', '不存在', '是不会', ' nowhere', ' nothing', ' none', '没有任何', '都没有' | 'It's impossible', 'neither', 'can't see', 'doesn't exist', 'will never', ' nowhere', ' nothing', ' none', 'nothing', 'nothing' |
| MLP[27, 9766] * -1 | 'might', ' maybe', 'may', 'maybe', ' might', 'Maybe', '有时候', '部分地区', ' may', '.some' | 'might', ' maybe', 'may', 'maybe', ' might', 'Maybe', 'Sometimes', 'Some areas', ' may', '.some' |

It turns out the antipodes of some of the negative value vectors are encoding words you would expect from positive value vectors!

So not only are we seeing positive value vectors being activated to promote tokens like "success", but inactive negative value vectors also get flipped to promote similar tokens.

Same thing with flipping positive value vectors:

| Layer, Index | Nearest Neighbors (k = 10) | English Translation |
|---|---|---|
| MLP[30, 8233] | ' correctly', '正确', '恰当', ' accurately', '符合', '合适', ' properly', ' adequately', '准确', ' accurate' | ' correctly', 'Correct', 'appropriate', ' accurately', 'fit', 'appropriate', ' properly', ' adequately', 'accurate', ' accurate' |
| MLP[30, 8233] * -1 | ' wrong', '不良', ' incorrect', 'wrong', ' invalid', ' bad', ' inappropriate', 'invalid', '不合格', '不当' | 'wrong', 'bad', 'incorrect', 'wrong', 'invalid', 'bad', 'inappropriate', 'invalid', 'unqualified', 'inappropriate' |
| MLP[26, 6475] | '倒是', '不失', '适度', 'successful', '.success', '却是', ' successful', '没事', '完好', '还不错' | 'It is', 'not lost', 'moderate', 'successful', '.success', 'but it is', ' successful', 'nothing', 'intact', 'not bad' |
| MLP[26, 6475] * -1 | ' also', ' likewise', 'Also', '也同样', ' тоже', ' também', '也非常', ' également', ' ALSO', ' Also' | ' also', ' likewise', ' Also', ' also', ' тоже', ' também', ' also very', ' également', ' ALSO', ' Also' |
| MLP[29, 6676] | ' yes', ' Yes', 'Bindable', ' exactly', 'Yes', '"Yes', 'yes', ' Yep', ' Exactly', ' included' | |
| MLP[29, 6676] * -1 | '都不', '不太', ' neither', '不予', '没见过', '都不是', '不曾', '不开', '不具备', '都没有' | 'neither', 'not quite', 'neither', 'not given', 'never seen', 'neither', 'never', 'not open', 'not possess', 'none' |
| MLP[25, 1124] | 'ELY', '怫', 'yes', ' Awesome', 'itchen', '没错', 'etur', '即时发生', 'ños', 'emade' | 'ELY', '怫', 'yes', ' Awesome', 'itchen', 'That's right', 'etur', 'Immediate', 'ños', 'emade' |
| MLP[25, 1124] * -1 | '根本', 'Prom', ' Prom', ')NULL', '">×<', '都不', '丝毫', 'ari', ' never', '抑制' | 'at all', 'Prom', ' Prom', ')NULL', '">×<', 'neither', 'slightly', 'ari', 'never', 'restrain' |
| MLP[27, 10388] | ' mirac', '乐观', '安然', ' Relief', '幸', '.isSuccess', '.SUCCESS', ' favorable', '优点', '顺利' | ' mirac ', ' Optimistic ', ' Safe ', ' Relief ', ' Luck ', '.isSuccess ', '.SUCCESS ', ' favorable ', ' Advantages ', ' Smooth ' |
| MLP[27, 10388] * -1 | '失败', ' failure', '不良', '不利', '糟糕', '失误', ' failures', ' bad', '失', '失望' | 'failure', ' failure', 'bad', 'unfavorable', 'bad', 'mistake', ' failures', ' bad', 'loss', 'disappointment' |

Again, sometimes (but not always!) the antipodes of value vectors encode the semantically opposite words.
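One possible reading of the antipode tables, sketched under assumptions: in a gated MLP of the form `down(act(gate(x)) * up(x))` (to my knowledge the layout Qwen2.5 uses, though the thread does not spell this out), the coefficient on a value vector can be negative. A negative coefficient on a vector whose nearest tokens are "failed"-like then pushes the output toward the tokens nearest its antipode. The vocabulary, weights, and input below are toy values, and `gated_coefficient` is a hypothetical helper name.

```python
import numpy as np


def silu(z):
    return z / (1.0 + np.exp(-z))


def gated_coefficient(x, gate_row, up_row):
    """Coefficient on one value vector in a gated MLP: silu(x . gate) * (x . up)."""
    return silu(x @ gate_row) * (x @ up_row)


vocab = ["failed", "success", "the"]
W_U = np.array([[1.0, 0.0, 0.0],      # "failed"
                [-1.0, 0.0, 0.0],     # "success" (toy antipode of "failed")
                [0.0, 1.0, 0.0]])     # "the"

value_vec = np.array([1.0, 0.0, 0.0])  # nearest token: "failed"
x = np.array([1.0, 0.5, 0.0])
gate_row = np.array([1.0, 0.0, 0.0])   # gate pre-activation is positive
up_row = np.array([-2.0, 0.0, 0.0])    # up pre-activation is negative

coef = gated_coefficient(x, gate_row, up_row)
logits = W_U @ (coef * value_vec)
print(coef, vocab[int(np.argmax(logits))])
```

Here the coefficient comes out negative, so the value vector's contribution points at its antipode and the highest logit goes to the "success" token rather than "failed".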

Conclusion

So we see that value vectors play some role in the model generating "solution tokens".

However, is it part of verification?

Rather, there seems to be some representation prior to these value vectors that is activating them in the first place.

The activation of value vectors seems to reinforce the model's decision to predict "this" or "not", and so we might characterize them as being a component of verification.

However, we don't have the full story quite yet.

How does the model know when to activate these value vectors?

The answer is likely in the attention heads, which I plan to investigate next.

Collaborating

I would love to hear your thoughts!

Please see the ARBOR page for discussions.

-

We are starting to host weekly open meetings! Mondays 2pm ET: https://discord.gg/nVrZ3Pw3?event=1348728274737696838 Anyone is welcome to join!

-

I did some experiments in #22 following the colab code shared by @wendlerc . Here are some short summaries:

In the todo list, direction 2 would be interesting as a way to get some utility out of this, though I imagine it would be challenging and require some tricks. For direction 4, one thing to try would be to test whether the model actually backtracks, by inserting mistakes into the solution right before it backtracks and feeding it to the model. Does it self-correct the mistake? And does this self-correction become weaker as we insert mistakes into later parts of the backtracking? Would love to hear your thoughts on this!

-

Hi @wj210 -- welcome!! Awesome experiments. My first question is, are you able to join our weekly meetings by chance?

-

I've started a repo to track our experiments. It's rather messy now but I hope to clean it up as we go. https://github.com/ajyl/verify_circuit/tree/main

-

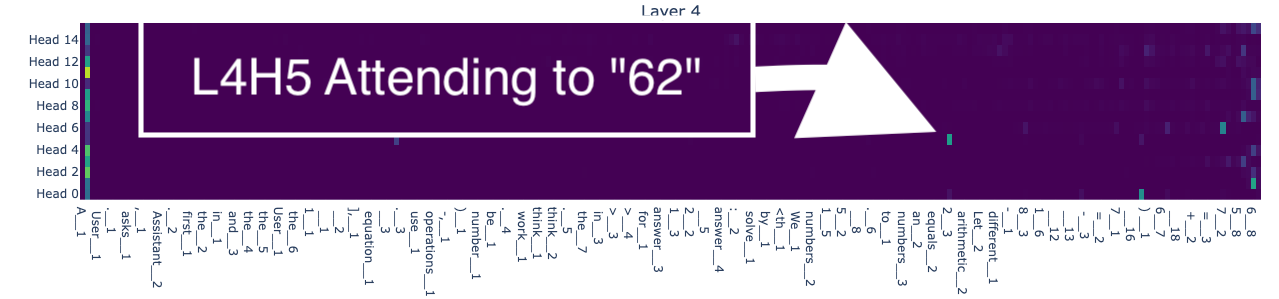

I have some new experiments:

Context

This is Part III of investigating how R1 does verifications.

(Recap from Part I) Model

As a reminder, we are working with a model trained on a specific reasoning task (a countdown task). Luckily, this means we know the exact token to look for when the model has found a solution. Thus, we have a specific timestep (right when a "(" is predicted, and when "this" will be predicted next) where we can do a deep dive.

In Part II, we looked at how MLP value vectors promote the likelihood of tokens such as "success" or "yes", or "incomplete" or "failed". In this post we will look at the role of attention in verification.

Attention Heads

Since our model outputs are nicely structured, we simply have to inspect the attention patterns right after the model has outputted the correct numeric answer. Consider the following example:

Let's look at the attention patterns right after 62 (the correct answer) is outputted.

One thing you may notice is that some heads attend to the previous occurrences of the correct answer (62)! Examples of this are L3H13, L4H0, L4H5, L5H9, and so on.

So let's try to turn off the "O-circuit" of these attention heads (i.e., these heads won't be able to write into the residual stream). (I just arbitrarily picked attention heads based on the visualization above. We will likely need a more systematic analysis later.)

And here is the output after turning off these attention heads:

Note the text marked in asterisks -- the model is now outputting the correct answer, but it is not recognizing it as such!

Relation to MLP Value Vectors

Previously, we identified MLP value vectors promoting tokens like "success" or "failed". Here, we can simply compare the activation of the value vectors before and after the attention heads are turned off:

As you can see, the value vectors corresponding to "success" etc. are no longer activated when the attention heads are turned off.

Next Steps

The biggest question that arises is, what about problems where the correct answer is not in the context?

Another question is how the model compares before and after RL fine-tuning: is the verification mechanism of the base model the same as that of the RL model?

Lastly, we should probably set up more systematic experiments to write up our findings in a potential paper.

Code

My code and experiments can be found here.
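Separately from the linked code, here is a minimal sketch of what "turning off" heads could mean: the attention layer's write to the residual stream is a sum of per-head terms, and an ablated head's term is simply dropped. The sizes, the random weights, and the helper name `attention_output` are assumptions for illustration, not the setup used in the experiments.

```python
import numpy as np


def attention_output(head_z, W_O, off_heads=()):
    """Sum per-head writes head_z[h] @ W_O[h], skipping heads in `off_heads`."""
    keep = np.ones(head_z.shape[0])
    for h in off_heads:
        keep[h] = 0.0
    return np.einsum("hd,hdm->m", head_z * keep[:, None], W_O)


rng = np.random.default_rng(0)
n_heads, d_head, d_model = 16, 4, 8                 # toy sizes (assumption)
head_z = rng.normal(size=(n_heads, d_head))          # per-head outputs at one position
W_O = rng.normal(size=(n_heads, d_head, d_model))    # per-head output projections

full = attention_output(head_z, W_O)
ablated = attention_output(head_z, W_O, off_heads=(0, 5))  # e.g. heads 0 and 5 of one layer

# The difference is exactly what the two ablated heads would have written.
removed = head_z[0] @ W_O[0] + head_z[5] @ W_O[5]
print(np.allclose(full - ablated, removed))
```

Zeroing the write, rather than changing the attention pattern, leaves the rest of the forward pass untouched, which makes the downstream effect easier to attribute to the chosen heads.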


-

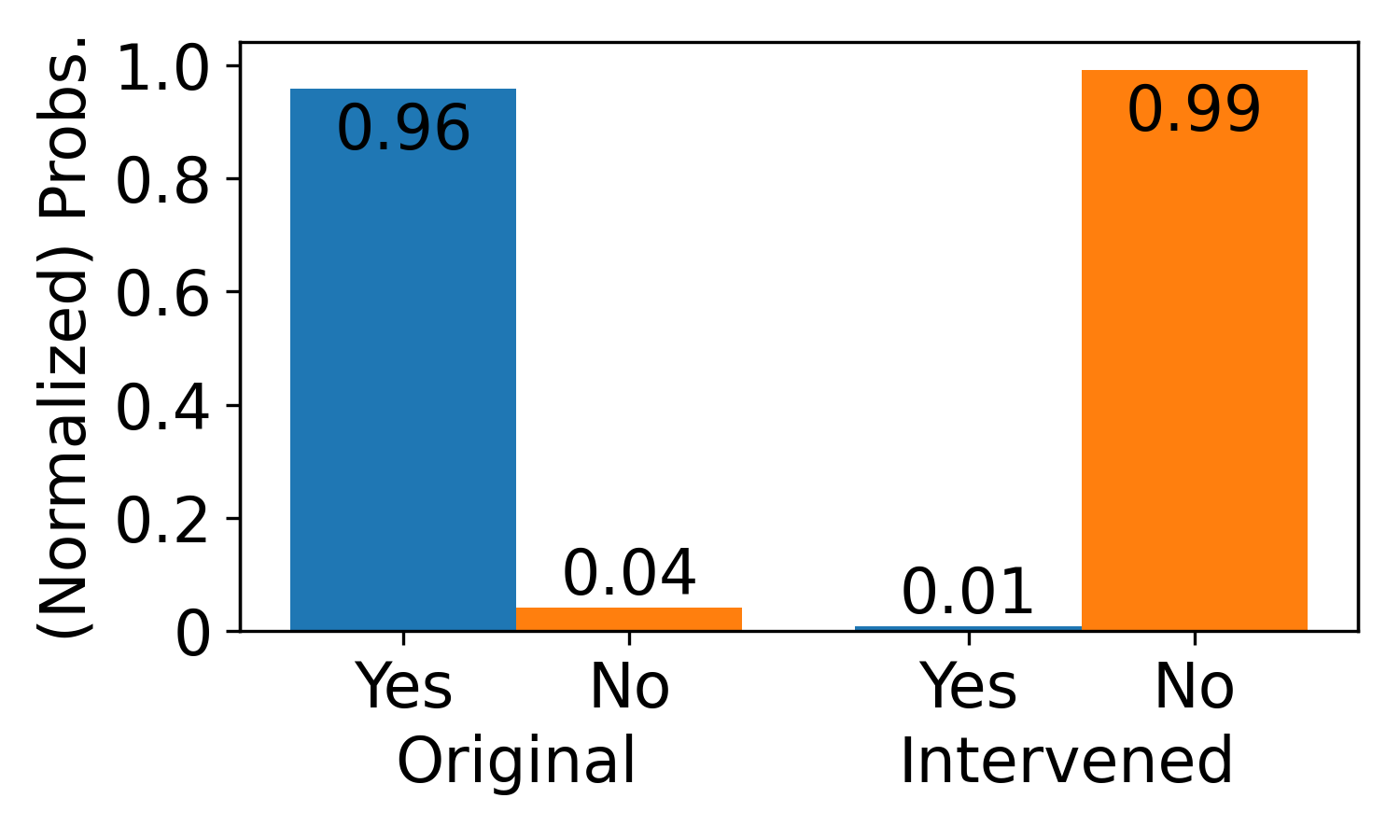

Comparing the Verification Mechanism of R1 vs. Base Model

Here I verify that the verification mechanisms of R1 and its base model counterpart are the same.

Comparing Weights of R1 vs. Base Model

Previously, we found some relevant weights for verification in our TinyZero-R1. We can simply check cosine-similarity scores and norms of these weights.

This suggests that we should expect to see a similar verification mechanism in our base model as well.

Verifying Verification Mechanism in Base Model

So previously we found attention heads doing verification. We're turning off 29 (out of 576) attention heads. With the base model (Qwen2.5-3B), we can simply take CoT attempts from the R1 model and ask the base model to verify them. Here's an example:

Below are the results before and after our interventions:

Great, so all of this suggests that the verification mechanism in the base model is the same as in the R1 model.

Next Steps

Again, the biggest question that arises is, what about problems where the correct answer is not in the context?

Code
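As an illustration only (this is not the code referenced in the post), the weight comparison described above could look like the following sketch. The matrices are random stand-ins for corresponding weights from the two models, and `compare_rows` is a hypothetical helper name.

```python
import numpy as np


def compare_rows(w_a, w_b):
    """Row-wise cosine similarity and norm ratio (||a|| / ||b||) of two matrices."""
    norm_a = np.linalg.norm(w_a, axis=1)
    norm_b = np.linalg.norm(w_b, axis=1)
    cosine = np.sum(w_a * w_b, axis=1) / (norm_a * norm_b)
    return cosine, norm_a / norm_b


rng = np.random.default_rng(0)
base = rng.normal(size=(4, 8))                      # stand-in for base-model weights
tuned = base + 0.01 * rng.normal(size=base.shape)   # stand-in for lightly changed weights

cosine, ratio = compare_rows(tuned, base)
print(np.round(cosine, 4), np.round(ratio, 4))
```

Cosine similarities and norm ratios both close to 1 would indicate that fine-tuning barely moved these weights, which is the kind of evidence the comparison is after.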

-

(Deprecated - leaving here just in case) TODO Items (help appreciated)(In arbitrary order)

-

Using the steering vector from @wendlerc, we can increase the performance of R1-Llama-8B on MATH500 by steering in the 'Reconsideration' direction!

More generally, the 'Reconsideration' direction trades off accuracy against CoT length. See experiments here. If you are still meeting today (Monday) at 3pm ET and are interested in this result, I can describe it better!


-

(Last updated: March 21)

Weekly meeting link: https://discord.com/events/1092554540231962686/1348728274737696838

Research Question

How do reasoning models verify their CoTs? How do they backtrack to a different solution?

Owners

Chris Wendler (@wendlerc), Andrew Lee (@ajyl), Harsh Raj

Contributors

Actively Seeking! Please reach out to Andrew on Discord.

Project Status

Work in Progress

Current Findings

Feb 17.

Chris and Andrew have independently found steering vectors that can make the model think that it has found a solution.

Chris has done so by taking contrastive pairs on an R1 model for GSM8K tasks (see #8), while Andrew has done so by training linear probes on a task-specific reasoning model (see discussion thread below).

Feb 28.

Some progress in understanding why tokens related to verification (e.g., "success", "ok", "complete", as well as "not", "failed") show up in mid layers when the toy model is logit-lensed.

Namely, I believe it's because the "W_out" weights of the MLP have vectors ("value vectors") that seem to encode semantic clusters relating to verification.

For details, see blog post.
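The value-vector view in the Feb 28 entry, and the zero-ablation used to test it, can be sketched as follows. This is a simplified sketch under assumptions: a plain ReLU MLP stands in for the real (gated) one, the probe direction is random, picking the top-k vectors by cosine similarity to the probe is my reading of "rely on our probe", and all sizes are toy values.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_mlp = 8, 32                       # toy sizes (assumption)
keys = rng.normal(size=(d_mlp, d_model))      # rows of W_in ("key vectors")
values = rng.normal(size=(d_mlp, d_model))    # rows of W_out ("value vectors")
x = rng.normal(size=d_model)

acts = np.maximum(keys @ x, 0.0)              # ReLU stands in for the real nonlinearity
mlp_out = acts @ values

# Same output written as an explicit weighted sum of value vectors.
weighted_sum = sum(a * v for a, v in zip(acts, values))

# Pick the k value vectors most aligned with a (random stand-in) probe direction.
probe = rng.normal(size=d_model)
cosine = (values @ probe) / (np.linalg.norm(values, axis=1) * np.linalg.norm(probe))
top_k = np.argsort(-cosine)[:4]

# Zero-ablation: drop those vectors' terms from the sum.
ablated_acts = acts.copy()
ablated_acts[top_k] = 0.0
ablated_out = ablated_acts @ values

# Counts quoted in the thread: 50 vectors in each of 18 layers, out of 11008 * 36.
fraction = (50 * 18) / (11008 * 36)
print(f"{fraction:.4%}")
```

Because the output is a sum over value vectors, ablating a handful of them removes exactly their terms and nothing else, which is why such a small fraction of the weights can be tested in isolation.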

Mar 19.

When the solution is in the context, attention heads verify the model's outputs with the solution in the context.

Turning off these heads will make the model think that its correct output is incorrect, and continue CoT attempts.

For details, see blog post.

Mar 21.

The R1 model and base model have the same verification circuits. See blog post.

Mar 31 Meeting Notes

We have a complete story for the CountDown task for Qwen. However, CountDown is a "context-based" verification task. We want to 1) understand verification for a prior-based task, or 2) understand verification in "in-the-wild" models.

Next steps:

Experiments

TODO items

Please ping Andrew on Discord to contribute!

Discord

https://discord.com/channels/1092554540231962686/1341439431030210570

Code

https://github.com/ajyl/verify_circuit/tree/main
