Returning a tuple will affect performance #330

Comments


Tuples are generally cheap (and don't require heap allocations) in Julia, so I'm skeptical that this is the source of your problem here. Have you checked type stability with
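As a rough illustration of the point about tuples, the sketch below measures heap allocation for a loop that calls a tuple-returning function. `logabs_pair`, `sum_first`, and `measure` are hypothetical stand-ins written for this example; they are not the SpecialFunctions.jl implementation, and `Test.@inferred` is just one possible way to check type stability.

```julia
using Test  # standard library; provides @inferred

# Hypothetical stand-in with the same (value, sign) return shape as logabsbeta.
logabs_pair(x::Float64) = (log(abs(x)), x < 0 ? -1 : 1)

# Sum only the first tuple element over a vector.
function sum_first(xs::Vector{Float64})
    acc = 0.0
    for x in xs
        v, _ = logabs_pair(x)
        acc += v
    end
    return acc
end

xs = rand(10_000) .+ 0.5

# @inferred throws an error if the return type is not inferred concretely.
@inferred logabs_pair(1.5)
@inferred sum_first(xs)

# Measure inside a function to avoid global-scope measurement artifacts.
measure(xs) = @allocated sum_first(xs)
measure(xs)            # warm up so compilation is not counted
bytes = measure(xs)    # heap bytes allocated by one call
println("bytes allocated: ", bytes)
```

If a loop like this reports many bytes per element, the cause is more likely a type instability around the call site than the tuple itself.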


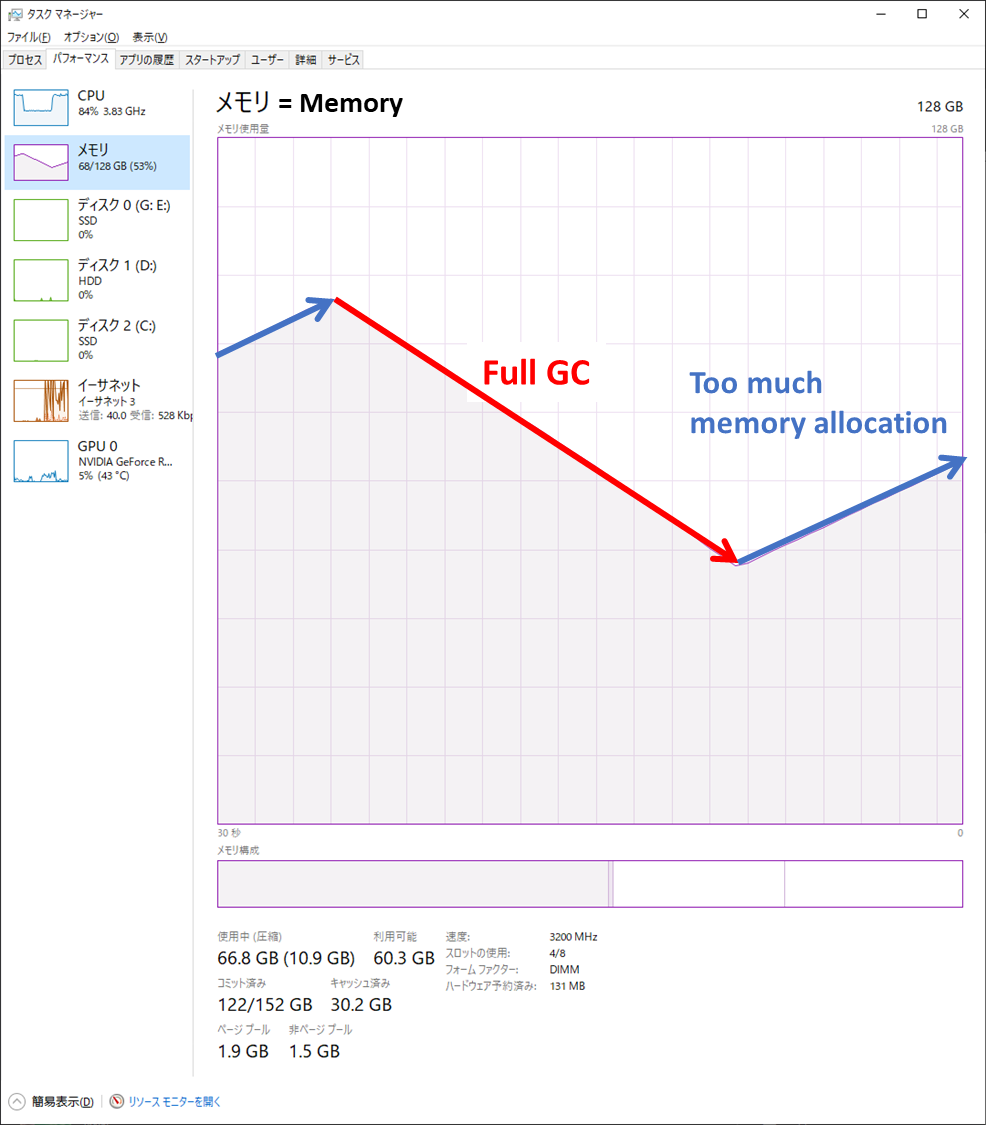

@stevengj can reproduce the memory consumption and GC behavior (as shown in the attached image above). I did


I'm starting to think that maybe the behavior is specific to my environment...


It looks like the

By the way, it seems like you are trying to do a parallel reduction, but doing this with a spinlock seems very suboptimal. See e.g. this discussion. You might want to use a package like ThreadsX.jl, which provides efficient multi-threaded reductions.
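For illustration, here is a minimal sketch of a lock-free parallel sum using only Base Julia. It is not the ThreadsX.jl implementation. `work` and `chunked_sum` are hypothetical names introduced for this example, and `work` stands in for the real per-element computation.

```julia
using Base.Threads: @spawn, nthreads

# Hypothetical per-element work; stands in for the real computation.
work(x) = log1p(x)

# Chunked parallel sum: each task reduces its own slice into a local
# accumulator, and the partial sums are combined once at the end,
# so no lock is needed around a shared accumulator.
function chunked_sum(f, xs::AbstractVector; ntasks::Int = nthreads())
    isempty(xs) && return 0.0
    chunk_len = cld(length(xs), ntasks)
    chunks = Iterators.partition(eachindex(xs), chunk_len)
    tasks = map(chunks) do idxs
        @spawn begin
            acc = 0.0
            for i in idxs
                acc += f(xs[i])
            end
            acc
        end
    end
    return sum(fetch, tasks)
end

xs = rand(1_000_000)
total = chunked_sum(work, xs)
println("total = ", total)
```

Because each task only touches its own local `acc`, threads never contend for a lock; the only synchronization is the final `fetch` of each partial result.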

My application evaluates logbeta a very large number of times.

This led to periodic memory exhaustion and full GC runs, as shown in the attached image.

I found that this was because logabsbeta returns a tuple.

Here is what I investigated.

First, I computed the sum of logbeta values using broadcasting.

A large number of allocations occurred.

I suspected that this was due to the use of broadcasts.
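The benchmark code for this step is not shown here. As a rough sketch of why broadcasting can contribute to allocation: broadcasting materializes a temporary array of results before `sum` runs, whereas a generator feeds values into the sum one at a time. `f`, `sum_broadcast`, and `sum_generator` below are hypothetical names, and `f` is only a stand-in for logbeta.

```julia
# Hypothetical two-argument function; stands in for logbeta.
f(a, b) = log(a) + log(b) - log(a + b)

avals = rand(100_000) .+ 1.0
bvals = rand(100_000) .+ 1.0

# Broadcasting builds a temporary array of all results, then sums it.
sum_broadcast(a, b) = sum(f.(a, b))

# A generator passes each result to the running sum without a temporary array.
sum_generator(a, b) = sum(f(x, y) for (x, y) in zip(a, b))

# Warm up both so compilation is not measured.
sum_broadcast(avals, bvals)
sum_generator(avals, bvals)

bytes_broadcast = @allocated sum_broadcast(avals, bvals)
bytes_generator = @allocated sum_generator(avals, bvals)
println("broadcast: ", bytes_broadcast, " bytes; generator: ", bytes_generator, " bytes")
```

The temporary array accounts for a single large allocation per call, so it would not by itself explain a large allocation count inside the loop.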

So I tried multi-threading without using broadcast.

It is 7.6 times faster, but a large number of allocations still occur.

I tried a logbeta calculation that does not involve tuples.

It is 14.5 times faster than the second version, and it keeps the number of allocations very small.

Since SpecialFunctions.jl functions may be called a very large number of times in an application, I would appreciate it if they returned primitive types wherever possible.
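The code for this variant is not shown here. One tuple-free formulation, assuming positive real arguments (this is an assumption, not necessarily what was used), follows from the definition of the beta function in terms of the gamma function:

$$
\log B(a, b) = \log \Gamma(a) + \log \Gamma(b) - \log \Gamma(a + b), \qquad a, b > 0 .
$$

For $a, b > 0$ every gamma value is positive, so no sign needs to be tracked and each term is a plain real number, for example from `loggamma` in SpecialFunctions.jl.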

Best regards.