

"The compiler is not able to distinguish between OF and CF chains, since both are represented as a different mode of a single flags register. This is the limitation of the compiler."

Unfortunately, for both performance and security reasons, it is important for generic cryptographic libraries to implement their own code generator.

The most widely used code generators are:

- perlasm, which is the core of OpenSSL: https://github.com/openssl/openssl/tree/OpenSSL_1_1_1-stable/crypto/perlasm
- qhasm, from Bernstein: https://cr.yp.to/qhasm.html

Instead of complicating the build system, we can directly implement the code generator using Nim metaprogramming features.

This ensures that unused assembly is not compiled in.

This significantly simplifies the build system.

A proof-of-concept code generator for multiprecision addition is available in constantine/constantine/primitives/research/addcarry_subborrow_compiler.nim (lines 34 to 77 at commit 3d1b1fa).
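A build-time generator knows the limb count when it generates the code. Loops therefore disappear, and the carry chain is emitted as straight-line code. The Constantine proof of concept uses Nim macros for this. The hypothetical C function `gen_add` below is only a language-neutral illustration of the idea. It prints an x86-64 AT&T-syntax routine that adds two N-limb integers. The System V register assignment (r in %rdi, a in %rsi, b in %rdx) and the function name are assumptions.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical generator: writes into `out` an x86-64 routine (AT&T syntax,
 * System V ABI: r in %rdi, a in %rsi, b in %rdx) computing r = a + b over
 * `nlimbs` 64-bit limbs (nlimbs >= 1) and returning the final carry in %eax.
 * The loop runs at generation time, so the emitted code is fully unrolled
 * and contains no branches. Returns 0 on success, -1 if `out` is too small. */
static int gen_add(char *out, size_t cap, int nlimbs) {
    size_t len = 0;
    int n;
    for (int i = 0; i < nlimbs; i++) {
        int off = 8 * i;
        /* ADD starts the carry chain; every later limb uses ADC. */
        const char *op = (i == 0) ? "addq" : "adcq";
        /* MOV does not modify the flags, so CF survives from one ADC to the next. */
        n = snprintf(out + len, cap - len,
                     "movq %d(%%rsi), %%rax\n"
                     "%s %d(%%rdx), %%rax\n"
                     "movq %%rax, %d(%%rdi)\n",
                     off, op, off, off);
        if (n < 0 || (size_t)n >= cap - len) return -1;
        len += (size_t)n;
    }
    /* Materialize the final carry as the return value. */
    n = snprintf(out + len, cap - len, "setc %%al\nmovzbl %%al, %%eax\nret\n");
    if (n < 0 || (size_t)n >= cap - len) return -1;
    return 0;
}
```

For example, `gen_add(buf, sizeof buf, 2)` writes two load/add/store triples, using ADD for the first limb and ADC for the second. These are followed by `setc`/`movzbl`/`ret`, which return the final carry.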

Security

General-purpose compilers can and do rewrite code as long as every observable effect is preserved. Unfortunately, timing is not considered an observable effect. General-purpose compilers keep getting smarter, and so does branch prediction in processors. As a result, compilers increasingly recognize code that was written to be branchless and rewrite it with branches, potentially exposing secret data.

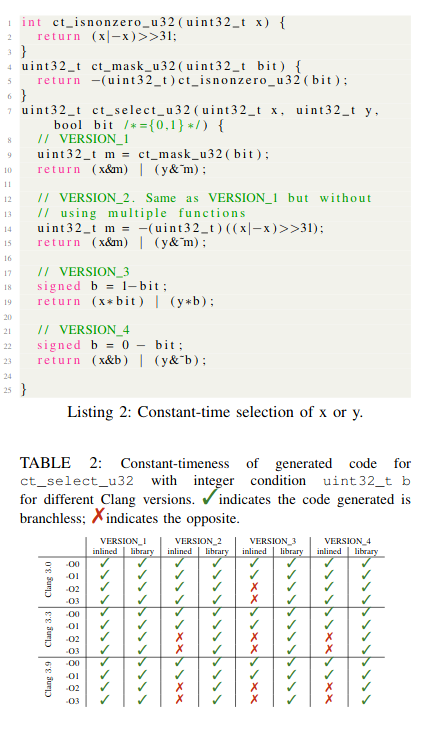

A typical example is conditional move (CMOV), which must be constant-time whenever secrets are involved (https://tools.ietf.org/html/draft-irtf-cfrg-hash-to-curve-08#section-4).

The paper "What you get is what you C: Controlling side effects in mainstream C compilers" (https://www.cl.cam.ac.uk/~rja14/Papers/whatyouc.pdf) exposes how compiler "improvements" are detrimental to cryptography. Another example is the secure erasure of secret data, which compilers often elide as an optimization.
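For the erasure problem, one common workaround is to call memset through a volatile function pointer, so the compiler cannot prove that the call is a dead store. Below is a sketch of that idiom; the names `memset_ptr` and `secure_zero` are hypothetical. It relies on widespread compiler behavior rather than on a guarantee from the C standard. Where available, `explicit_bzero` (glibc, the BSDs) or C11 Annex K `memset_s` are more robust choices.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper. Because the pointer is volatile, the compiler must
 * reload it at each call and cannot assume it still points to memset, so
 * it cannot remove the call as a dead store. This is a common idiom, not a
 * guarantee of the C standard. */
static void *(*const volatile memset_ptr)(void *, int, size_t) = memset;

static void secure_zero(void *p, size_t n) {
    memset_ptr(p, 0, n);
}
```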

These are not theoretical exploits, as explained in the article "When constant-time doesn't save you" (https://research.kudelskisecurity.com/2017/01/16/when-constant-time-source-may-not-save-you/). It describes an attack against Curve25519, which was designed to be easy to implement in constant time. The attack is due to an "optimization" in the MSVC compiler.

Verification of Assembly

The generated assembly code needs special tooling for formal verification, different from the tooling for the C code in #6.

Recently, Microsoft Research introduced Vale:

Vale: Verifying High-Performance Cryptographic Assembly Code

Barry Bond and Chris Hawblitzel, Microsoft Research; Manos Kapritsos, University of Michigan; K. Rustan M. Leino and Jacob R. Lorch, Microsoft Research; Bryan Parno, Carnegie Mellon University; Ashay Rane, The University of Texas at Austin; Srinath Setty, Microsoft Research; Laure Thompson, Cornell University

https://www.usenix.org/system/files/conference/usenixsecurity17/sec17-bond.pdf

https://github.com/project-everest/vale

Vale can be used to verify assembly crypto code against the architecture and also detect timing attacks.

Performance

Beyond security, compilers do not expose several primitives that are necessary for multiprecision arithmetic.

Add with carry, sub with borrow

The most egregious example is add with carry, which led the GMP team to implement everything in assembly. This is a most basic need: almost all processors have an ADC instruction, and some, like the 6502 from the 1970s, only have ADC and no ADD.
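For reference, here is what multiprecision addition looks like in portable C. The carry has to be reconstructed from unsigned wraparound comparisons, and emitting ADC depends entirely on the compiler recognizing the pattern. This is a minimal sketch; the name `mp_add` and the little-endian limb order are assumptions.

```c
#include <stddef.h>
#include <stdint.h>

/* r = a + b over n 64-bit limbs (least significant limb first); returns the
 * carry out. Portable C has no carry flag, so each carry is recovered from
 * an unsigned wraparound comparison. */
static uint64_t mp_add(uint64_t *r, const uint64_t *a, const uint64_t *b, size_t n) {
    uint64_t carry = 0;
    for (size_t i = 0; i < n; i++) {
        uint64_t s = a[i] + carry;
        uint64_t c1 = s < carry;  /* wrapped while adding the carry-in */
        uint64_t t = s + b[i];
        uint64_t c2 = t < s;      /* wrapped while adding b[i] */
        r[i] = t;
        carry = c1 | c2;          /* at most one of c1, c2 can be set */
    }
    return carry;
}
```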

See:

Some specific platforms, for example x86, might expose add with carry, but even then the code generation might be extremely poor: https://gcc.godbolt.org/z/2h768y

GCC

Clang

(Reported as fixed, but is it? https://gcc.gnu.org/bugzilla/show_bug.cgi?id=67317)

And there is no way to use ADC on ARM architectures with GCC.

Clang does offer __builtin_addcll, which may or may not generate good code now, given that fixing add-with-carry code generation for x86 took years. Alternatively, Clang recently gained a new arbitrary-width integer type called ExtInt (announced in April 2020: http://blog.llvm.org/2020/04/the-new-clang-extint-feature-provides.html). However, it is unknown whether the generated code is guaranteed to be constant-time.

See also: https://stackoverflow.com/questions/29029572/multi-word-addition-using-the-carry-flag/29212615
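Below is a sketch of how __builtin_addcll could be used with a portable fallback. It assumes the compiler advertises the builtin through `__has_builtin` (Clang does). The wrapper names `addcll` and `mp_add_builtin` are hypothetical. Whether the resulting code uses ADC, or is constant-time, still has to be checked in the generated assembly.

```c
#include <stddef.h>

/* Detect the builtin; the nested #if is skipped entirely on compilers that
 * do not define __has_builtin. */
#if defined(__has_builtin)
#  if __has_builtin(__builtin_addcll)
#    define HAVE_BUILTIN_ADDCLL 1
#  endif
#endif

/* Returns x + y + cin and stores the carry out in *cout (cin must be 0 or 1). */
static unsigned long long addcll(unsigned long long x, unsigned long long y,
                                 unsigned long long cin, unsigned long long *cout) {
#ifdef HAVE_BUILTIN_ADDCLL
    return __builtin_addcll(x, y, cin, cout);
#else
    unsigned long long s = x + y;
    unsigned long long c1 = s < x;
    unsigned long long t = s + cin;
    *cout = c1 | (t < s);
    return t;
#endif
}

/* r = a + b over n limbs, least significant first; returns the carry out. */
static unsigned long long mp_add_builtin(unsigned long long *r,
                                         const unsigned long long *a,
                                         const unsigned long long *b, size_t n) {
    unsigned long long carry = 0;
    for (size_t i = 0; i < n; i++)
        r[i] = addcll(a[i], b[i], carry, &carry);
    return carry;
}
```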

Conditional move

Unlike add with carry, which can be expressed but may lead to inefficient code, conditional move essentially requires assembly (see the Security section), as there is no builtin for it at all.

A constant-time conditional move based on xor-masking would require 4-5 instructions instead of just 1.
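Below is a sketch of the xor-masking approach, with the intended x86-64 instruction for each operation noted in comments. The names `ct_select` and `ccopy` are hypothetical, and `ctl` must be exactly 0 or 1. Nothing in the C language prevents a compiler from turning the mask back into a branch, which is the argument for emitting this in assembly.

```c
#include <stddef.h>
#include <stdint.h>

/* Returns x if ctl == 1 and y if ctl == 0; ctl must be exactly 0 or 1.
 * Comments give the intended x86-64 instruction for each step: about four
 * instructions where a single CMOV would do. */
static inline uint64_t ct_select(uint64_t ctl, uint64_t x, uint64_t y) {
    uint64_t mask = (uint64_t)0 - ctl; /* NEG: 1 -> all ones, 0 -> all zeros */
    return y ^ ((x ^ y) & mask);       /* XOR, AND, XOR */
}

/* CMOV-style conditional copy over multi-limb integers:
 * a = ctl ? b : a, touching every limb regardless of ctl. */
static void ccopy(uint64_t *a, const uint64_t *b, size_t nlimbs, uint64_t ctl) {
    for (size_t i = 0; i < nlimbs; i++)
        a[i] = ct_select(ctl, b[i], a[i]);
}
```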

MULX

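On x86-64, the MUL instruction is bottlenecked by the fact that its result always goes to the RAX and RDX registers. This means that multiplications cannot be interleaved to exploit instruction-level parallelism. MULX (from the BMI2 extension) takes explicit destination registers and does not modify the flags, which removes this constraint.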
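To make the constraint concrete, here is a schoolbook multiplication sketch. It assumes the `unsigned __int128` extension of GCC and Clang on 64-bit targets, which is not standard C. Each `(u128)a[i] * b[j]` is a 64×64→128-bit product, which on x86-64 compiles to MUL (result in RDX:RAX) or, with BMI2 enabled, possibly MULX. The products within a row do not depend on each other; only their accumulation is serial. Freeing the destination registers is what lets those products overlap. The name `mp_mul` is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

typedef unsigned __int128 u128; /* GCC/Clang extension, 64-bit targets only */

/* r[0..2n) = a[0..n) * b[0..n), schoolbook (operand scanning), limbs least
 * significant first. Hypothetical helper; r must not alias a or b. */
static void mp_mul(uint64_t *r, const uint64_t *a, const uint64_t *b, size_t n) {
    for (size_t i = 0; i < 2 * n; i++)
        r[i] = 0;
    for (size_t i = 0; i < n; i++) {
        uint64_t carry = 0;
        for (size_t j = 0; j < n; j++) {
            /* 64x64 -> 128-bit product: MUL on x86-64 (result fixed in RDX:RAX),
             * possibly MULX when BMI2 is enabled. The sum cannot overflow:
             * (2^64-1)^2 + 2*(2^64-1) = 2^128 - 1. */
            u128 t = (u128)a[i] * b[j] + r[i + j] + carry;
            r[i + j] = (uint64_t)t;
            carry = (uint64_t)(t >> 64);
        }
        r[i + n] = carry;
    }
}
```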

ADCX/ADOX

On x86-64, the add-with-carry instruction is bottlenecked by the fact that there is a single carry flag. This means that additions with carry cannot be interleaved to exploit instruction-level parallelism.

Furthermore, GCC was not designed to track carry chains, and Clang can only track a single chain.

ADCX and ADOX enable two independent carry chains, using the carry flag (CF) and the overflow flag (OF) respectively.

https://gcc.gnu.org/legacy-ml/gcc-help/2017-08/msg00100.html

https://bugs.llvm.org/show_bug.cgi?id=34249#c6
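The dataflow that ADCX/ADOX enable can be sketched in portable C with two separate carry variables, one per flag. In each step of a multiply-accumulate row, the low half of `a * b[j]` goes into `r[j]` on one chain, and the high half goes into `r[j+1]` on the other. The two chains therefore never wait on each other. This is a hypothetical model of the pattern only: the function names are assumptions, and `unsigned __int128` is assumed to be available. Written in C, the compiler is not expected to map `cf` and `of` onto the real CF and OF flags.

```c
#include <stddef.h>
#include <stdint.h>

typedef unsigned __int128 u128; /* GCC/Clang extension, 64-bit targets only */

/* Add with carry-in/carry-out; cin must be 0 or 1. */
static inline uint64_t addc(uint64_t x, uint64_t y, uint64_t cin, uint64_t *cout) {
    uint64_t s = x + y;
    uint64_t c1 = s < x;
    uint64_t t = s + cin;
    *cout = c1 | (t < s);
    return t;
}

/* Hypothetical row step of a multiplication: r[0..n+1] += a * b[0..n).
 * r has n + 2 limbs and r[n + 1] must be 0 on entry so the result fits.
 * `cf` models the ADCX chain (carry flag), `of` the ADOX chain (overflow flag). */
static void mac_row_2chains(uint64_t *r, uint64_t a, const uint64_t *b, size_t n) {
    uint64_t cf = 0, of = 0;
    for (size_t j = 0; j < n; j++) {
        u128 p = (u128)a * b[j];                  /* MULX: leaves both flags untouched */
        uint64_t lo = (uint64_t)p, hi = (uint64_t)(p >> 64);
        r[j] = addc(r[j], lo, cf, &cf);           /* ADCX: low half into r[j] */
        r[j + 1] = addc(r[j + 1], hi, of, &of);   /* ADOX: high half into r[j+1] */
    }
    /* Flush both chains: the CF carry is owed to r[n], the OF carry to r[n + 1]. */
    uint64_t c;
    r[n] = addc(r[n], 0, cf, &c);
    r[n + 1] += of + c;
}
```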

In MCL and Goff, combining MULX, ADCX and ADOX improves field multiplication speed by about 20%.

See the Intel papers:
